I'm working on a new product :)

For the past month, I’ve been building a new SaaS product, and today I’m excited to share a sneak peek of it with you!

This is particularly exciting for me, as I haven't launched a paid side project since Engine. And although I’ve built things since then, none of them excited me enough to bring to market.

So what makes this product different?

I look for a few things when I build a side project. I want it to be B2B, SaaS, and to solve a problem I personally experience. It should also be feasible for a solo developer to build. And I want it to be easily "pivotable", meaning most of the code can be reused if it turns out there's a different problem I need to solve.

It's hard to find something that checks those boxes, but this product does.

I decided to build this product after working in the startup world these past two years. During this time, I kept running into the same problem over and over, and it's one I also experienced frequently at Engine and Skiwise.

And the problem is this: products break, but testing is costly. For startups, achieving both development speed and product stability can seem impossible.

The status quo

Currently, the way to prevent products from breaking is by writing automated tests. These tests define the steps needed to complete a task in your product, and they run automatically whenever you deploy changes.

For example, an automated test that verifies an "Edit profile" form is working might look like this:

```typescript
import { test, expect } from '@playwright/test';

test('allows user to edit profile and save changes', async ({ page }) => {
  // Open the edit-profile page
  await page.goto('https://yourapp.com/profile/edit');

  // Fill in the form fields
  await page.fill('input[name="name"]', 'John Doe');
  await page.fill('input[name="email"]', 'john.doe@example.com');

  // Submit the form
  await page.click('button:has-text("Save Changes")');

  // Confirm the success message appears (locator() is synchronous, no await needed)
  const successMessage = page.locator('text=Profile updated successfully');
  await expect(successMessage).toBeVisible();
});
```

Apps can have hundreds or even thousands of these tests, and they all run before every new update is deployed. Awesome!

But wait... Do you notice a problem with the test above? What if we update our app and rename the "Save Changes" button? Or add a third input to the edit profile form? Or move the edit profile page elsewhere?

This is the root problem with automated testing. Automated tests verify your functionality, but they're extremely brittle to changes. That makes it difficult to maintain complete test coverage while also shipping new features as fast as a startup needs to.
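To make the brittleness concrete, here's a toy sketch in plain TypeScript rather than Playwright. The `ToyPage` type and `findButtonByText` helper are hypothetical; the helper is a simplified stand-in for a text selector (a case-insensitive substring match on the button label). The test's instruction never changes, yet a harmless rename is enough to make the lookup fail.

```typescript
// A toy model of a page: just its visible button labels.
// (Hypothetical stand-in for a real browser page, for illustration only.)
interface ToyPage {
  buttons: string[];
}

// Simplified text selector: case-insensitive substring match on the label.
function findButtonByText(page: ToyPage, text: string): string | undefined {
  const needle = text.toLowerCase();
  for (const label of page.buttons) {
    if (label.toLowerCase().indexOf(needle) !== -1) return label;
  }
  return undefined;
}

// The test's hard-coded instruction, copied from the example above.
const selectorText = "Save Changes";

const before: ToyPage = { buttons: ["Cancel", "Save Changes"] };
// After a harmless UI tweak, the same button is simply labelled "Save".
const after: ToyPage = { buttons: ["Cancel", "Save"] };

console.log(findButtonByText(before, selectorText)); // → Save Changes
console.log(findButtonByText(after, selectorText)); // → undefined (the test would fail here)
```

Nothing about the user experience changed between `before` and `after`; only the test's assumption about the label did.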

The other problem with automated tests is that they're time-consuming to write. This is a simple example; real tests are commonly much more complicated. And again, multiply this work 100 times over.

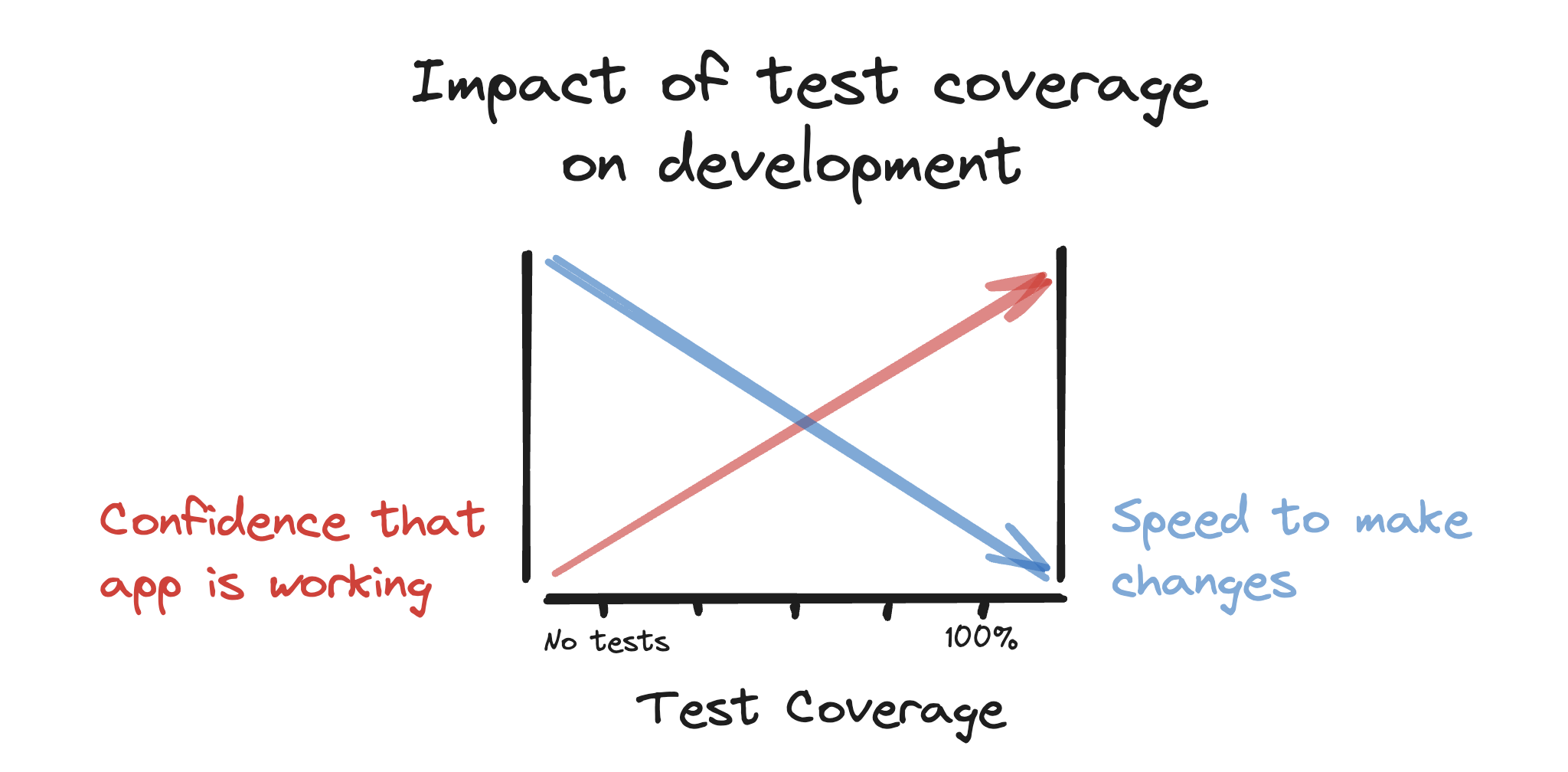

Essentially, automated testing code follows an efficiency graph like so:

As you can see, adding more tests increases your confidence that your app works, but also hinders your development speed at the same time.

The problem

For most companies, having to write and maintain automated tests isn't a problem. The value of testing outweighs its cost to productivity, because a new feature is typically worth less than the features you already have.

But for startups, this typically isn't true.

A startup exists to solve problems for their customers. A product is the proposed solution. Tests ensure the solution keeps functioning as intended. But here’s the bigger question: what if your product isn’t the right solution to begin with?

Some argue that you should always test your product rigorously, but I believe testing should serve a purpose: ensuring your product keeps solving the problem for your customer.

If the goal of a test is to ensure your product continues solving the problem, shouldn’t the first priority be validating that your product is solving the problem in the first place?

Shouldn't you ensure that it’s a viable business before focusing on its technical reliability with testing?

Most startup founders would agree with me, which is why you hear this phrase so often in the startup world:

"Move fast and break things" - Mark Zuckerberg

But I believe we can do even better than this mantra.

Move fast and don't break things

And that brings us to the solution I've been building for the past month: a tool that automatically tests whether your product is fully functional, without the overhead of traditional automated tests.

My tool handles the entire job of automated testing for you, without any micromanagement needed on your part. Instead of writing tests, you simply define broad goals that you want a user to be able to accomplish.

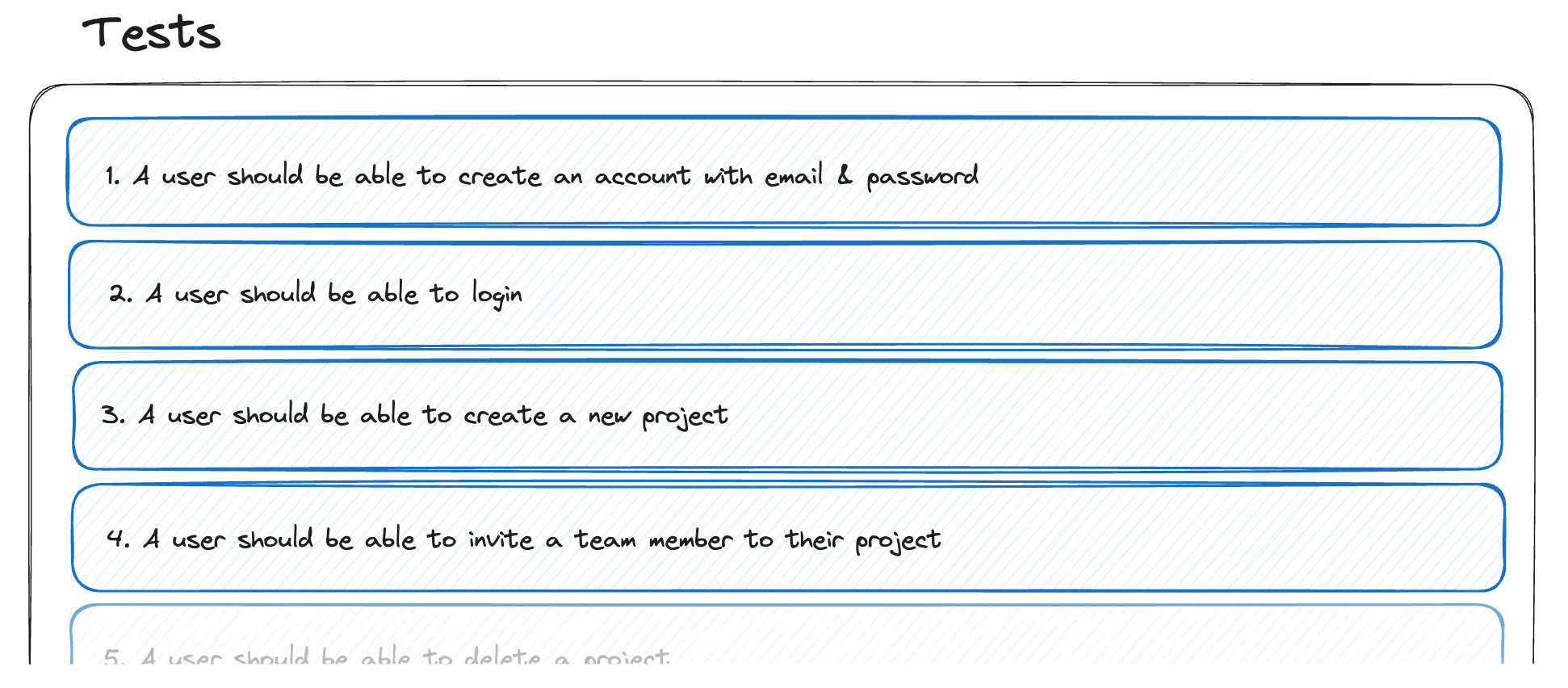

You don't do this through code, but through simple explanations of end-user experiences. So your testing suite will look something like this:

From these broad goals, AI will walk through your site and automatically generate the specific tests you need, essentially writing your entire automated testing suite for you.

Then, on every code change, we run those tests against your product to make sure it still functions exactly as it did before.

But what if a test fails?

Unlike traditional tests that only have specific instructions, our approach includes both the specific instructions and a broader goal for each test. This means a test might fail on a specific detail, but still meet the broader goal.
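One way to picture this, as a sketch only: the `Step`, `GoalTest`, and `Verdict` types and the `classify` helper below are hypothetical, not the product's actual data model. Each test pairs a plain-language goal with the specific steps generated for it, and a failed run only counts as a breakage when the goal itself can no longer be reached.

```typescript
// Hypothetical shape of a goal-based test: a plain-language goal plus the
// concrete steps generated for it. Field names are illustrative.
type Step =
  | { action: "goto"; url: string }
  | { action: "fill"; field: string; value: string }
  | { action: "click"; buttonText: string }
  | { action: "expectText"; text: string };

interface GoalTest {
  goal: string; // broad, user-facing intent
  steps: Step[]; // specific instructions derived from that goal
}

// The three outcomes a run can end in.
type Verdict = "pass" | "needs_update" | "broken";

// stepsPassed: did the specific instructions succeed?
// goalMet: could the broad goal still be accomplished?
function classify(stepsPassed: boolean, goalMet: boolean): Verdict {
  if (stepsPassed) return "pass";
  // Steps failed, but a user can still reach the goal: the test is stale.
  if (goalMet) return "needs_update";
  // Neither the steps nor the goal succeed: the product is broken.
  return "broken";
}

const editProfile: GoalTest = {
  goal: "A user should be able to edit their profile",
  steps: [
    { action: "goto", url: "https://yourapp.com/profile/edit" },
    { action: "fill", field: "name", value: "John Doe" },
    { action: "fill", field: "email", value: "john.doe@example.com" },
    { action: "click", buttonText: "Save Changes" },
    { action: "expectText", text: "Profile updated successfully" },
  ],
};

// A run where a specific step failed but the goal was still reachable:
console.log(`${editProfile.goal}: ${classify(false, true)}`);
// prints: A user should be able to edit their profile: needs_update
```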

For example, if the goal is “a user should be able to edit their profile,” deploying a code change that renames the “Save Changes” button to “Save” would cause the specific test to fail. From the user’s perspective, however, the product still works perfectly.

And truly, this test shouldn't fail. You want your tests to ensure that your product is functional, but in the real world, tests almost always fail because of product changes that don't break the user experience.

Here’s where we shine: we can determine if your product is broken or if it's just a code change that means you need to update your test. And if it's the latter, we'll heal your test automatically with just the press of a button.

So, for the example above, we'll update the specific instructions to look for a "Save" button instead of a "Save Changes" button, re-run the test, and then save the updated version for future runs.
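As a rough illustration of the healing step, here's a sketch under loud assumptions: the `ClickStep` type and `healClickStep` function are hypothetical, and a simple word-overlap heuristic stands in for whatever smarter judgment the real tool makes. It looks at the buttons currently on the page and rewrites the failed step to use the label sharing the most words with the old one.

```typescript
// Hypothetical failed step from the edit-profile test.
interface ClickStep {
  action: "click";
  buttonText: string;
}

// Lower-cased words in a label, e.g. "Save Changes" -> ["save", "changes"].
function words(label: string): string[] {
  return label.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
}

// Number of words from `a` that also appear in `b`.
function overlap(a: string, b: string): number {
  const wb = words(b);
  return words(a).filter((w) => wb.indexOf(w) !== -1).length;
}

// Returns an updated step, or null if no label on the page looks like a rename.
function healClickStep(step: ClickStep, labelsOnPage: string[]): ClickStep | null {
  let best: string | null = null;
  let bestScore = 0;
  for (const label of labelsOnPage) {
    const score = overlap(step.buttonText, label);
    if (score > bestScore) {
      best = label;
      bestScore = score;
    }
  }
  return best === null ? null : { ...step, buttonText: best };
}

const failed: ClickStep = { action: "click", buttonText: "Save Changes" };
console.log(healClickStep(failed, ["Cancel", "Save"]));
// → { action: 'click', buttonText: 'Save' }
```

A heuristic this naive could "heal" a rename that actually changed the button's meaning, which is why the updated test should be re-run against the broader goal before it's saved.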

No more pushing code changes simply to update your testing code.

Another advantage is that this removes the false alerts you would otherwise get frequently. Since we can tell a product breakage apart from a product change, high-priority alerts actually mean something!
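Here's a sketch of how that alert filtering could work, assuming each run ends in one of three verdicts. The `Verdict` values, the `route` function, and the test names are all hypothetical: only a broken product raises an alert, while stale tests go to a queue for a one-click update.

```typescript
// Hypothetical verdicts a finished run can produce.
type Verdict = "pass" | "needs_update" | "broken";

interface RunResult {
  testName: string;
  verdict: Verdict;
}

interface Routed {
  alerts: string[]; // high priority: the product is actually broken
  updateQueue: string[]; // low priority: the test just needs healing
}

function route(results: RunResult[]): Routed {
  const routed: Routed = { alerts: [], updateQueue: [] };
  for (const r of results) {
    if (r.verdict === "broken") routed.alerts.push(r.testName);
    else if (r.verdict === "needs_update") routed.updateQueue.push(r.testName);
    // "pass" needs no action.
  }
  return routed;
}

const routed = route([
  { testName: "edit profile", verdict: "needs_update" },
  { testName: "checkout", verdict: "broken" },
  { testName: "sign up", verdict: "pass" },
]);
console.log(routed.alerts); // → [ 'checkout' ]
console.log(routed.updateQueue); // → [ 'edit profile' ]
```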

I'll be launching soon. There's still functionality to add, but the core is working and I'm excited to share it!

Anyway, that's all. Thanks!